What is Compute Express Link?

Compute Express Link (CXL) is a breakthrough high-speed CPU-to-device interconnect that has become the new industry I/O standard. CXL builds upon and supports PCI Express 5.0, utilising both its physical and electrical interface, and allows disaggregated resource sharing to improve performance whilst lowering costs. The road to get here, however, was long and fraught with competition in what became known as the ‘interconnect wars’.

CXL was primarily developed by Intel as an interface to coherently link CPUs to all other types of compute resources. Over time, Intel joined forces with other industry giants to form the CXL Consortium and jointly develop this interface into a new open standard. CXL has competed against the CCIX, OpenCAPI, Gen-Z, Infinity Fabric and NVLink interconnect technologies, which somewhat fractured the industry as vendors backed their own interconnect standards. Within the last couple of years, CXL has reached critical mass and emerged as the clear winner, as the industry has recognised the need to consolidate behind a single interconnect standard. The CXL Consortium now has every major semiconductor vendor and hyperscaler on board; on its Board of Directors are the following:

As you can see, all the industry behemoths are on board with this new open standard. Compute Express Link is set to be a turning point in the way that we all offer computing structures and solutions going forward.

What problem does it solve?

So, Compute Express Link sounds like it might be a big deal, but what problems does it solve exactly?

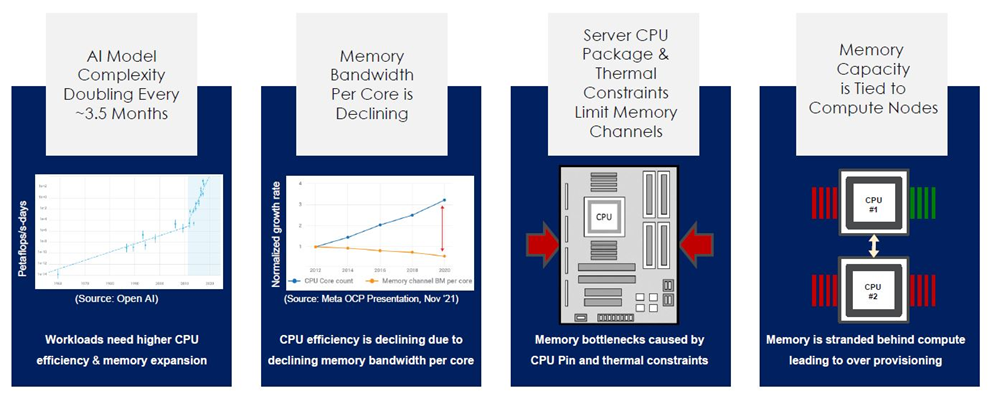

We’ve all seen how CPU core counts have rapidly increased over the last decade, and this is set to continue with everyone now adopting chiplet architectures. However, memory channel bandwidth per core has not kept pace and is falling behind. This is despite efforts to add channels through larger sockets, PCBs with more layers and bigger form factors. We have now hit physical constraints on all these fronts.

Datacentres and hyperscalers are, and have been, huge consumers of memory, but despite this large cost there has often been poor utilisation of memory resources. In fact, Microsoft has stated that Dynamic Random Access Memory (DRAM) accounts for half of their server costs. Despite this huge DRAM cost, up to 25% of it is left stranded because it is provisioned for worst-case workloads. In short, up to 12.5% of some hyperscalers’ server costs (25% of 50%) is currently being wasted, costing many billions of dollars.
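The 12.5% figure is simply the product of the two percentages quoted above. As a minimal sketch of that arithmetic (the function and variable names are illustrative, and the inputs are the upper-bound figures from the text rather than measured data):

```python
def stranded_cost_share(dram_share_of_server_cost, stranded_share_of_dram):
    """Fraction of total server cost tied up in stranded (unused) DRAM."""
    return dram_share_of_server_cost * stranded_share_of_dram


# Figures quoted in the article: DRAM is half of server cost,
# and up to a quarter of that DRAM is stranded.
dram_share = 0.50
stranded_share = 0.25

wasted = stranded_cost_share(dram_share, stranded_share)
print(f"Up to {wasted:.1%} of server cost is stranded DRAM")
```

Changing either input shows how sensitive the waste is to provisioning policy: halving the stranded share halves the wasted cost.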

From this we can see that there is a clear drive to be able to dynamically use memory on VMs spread across many CPUs and servers, rather than having it reside next to each CPU. Utilisation rates would be greatly increased as memory provisioning adjusts to the demands of the workloads. CXL promises to allow for more efficient use of resources, and not just for memory: it will soon be used for all types of heterogeneous compute and networking.

How does it work?

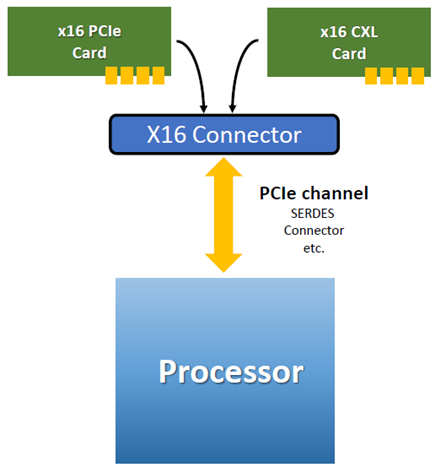

Compute Express Link is an open industry standard that leverages the existing and ubiquitous PCIe infrastructure. This enables a high-bandwidth, low-latency interconnect between CPUs and devices. It also allows memory coherency between the CPU’s memory and the memory of attached devices. This in turn allows resource sharing for optimum performance and lower overall system costs.

First-generation CXL will allow 32 GT/s per lane on the PCIe 5.0 interface and will auto-negotiate whether to use the standard PCIe protocol or the alternate CXL transaction protocol as necessary. The opportunities that CXL presents are expected to be a key driver for an accelerated timeline to PCIe 6.0, where bandwidth will be doubled and more innovation will be able to proliferate.
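To put the first-generation figure in context, here is a rough back-of-the-envelope calculation. It assumes the 32 figure is a per-lane signalling rate, a 16-lane link, and PCIe 5.0's 128b/130b line encoding; protocol overheads above the line encoding are ignored, so real throughput will be lower. The function name and defaults are illustrative only.

```python
def link_bandwidth_gbytes_per_s(rate_gt_per_s, lanes, encoding_efficiency=1.0):
    """Approximate one-direction link bandwidth in GB/s.

    rate_gt_per_s       -- per-lane signalling rate in GT/s (one bit per transfer)
    lanes               -- link width, e.g. 16 for an x16 slot
    encoding_efficiency -- payload bits per line bit (128/130 for PCIe 5.0)
    """
    return rate_gt_per_s * lanes * encoding_efficiency / 8  # 8 bits per byte


# PCIe 5.0 x16 with 128b/130b encoding (assumed link width).
gen5_x16 = link_bandwidth_gbytes_per_s(32, 16, 128 / 130)

# Raw (pre-encoding) figures, to show the doubling expected with PCIe 6.0.
gen5_raw = link_bandwidth_gbytes_per_s(32, 16)
gen6_raw = link_bandwidth_gbytes_per_s(64, 16)

print(f"PCIe 5.0 x16: ~{gen5_x16:.1f} GB/s per direction")
print(f"Raw x16 rate: {gen5_raw:.0f} GB/s (5.0) vs {gen6_raw:.0f} GB/s (6.0)")
```

The PCIe 6.0 line is left as a raw rate on purpose, since its encoding differs from 128b/130b and is not covered here.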

CXL protocols and use cases

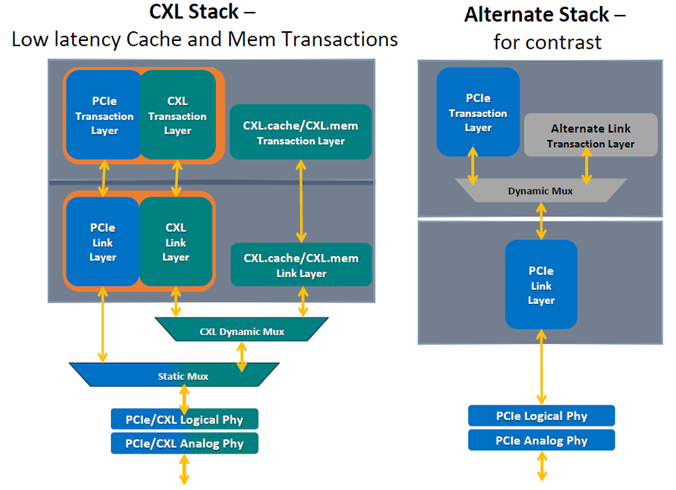

The Compute Express Link transaction layer comprises three sub-protocols that are dynamically multiplexed on a single link: CXL.cache, CXL.memory (written CXL.mem in the specification) and CXL.io.

CXL.io passes through the CXL stack essentially the same as the standard PCIe stack and is used for link initialisation and management, device discovery, enumeration, interrupts, DMA and register access. It’s mandatory for all CXL devices to support the CXL.io protocol.

The CXL.memory protocol provides the host processor with direct access to device-attached memory using load/store commands. This allows the CPU to coherently access memory on a GPU or another accelerator card, as well as storage class memory devices.

The CXL.cache protocol, on the other hand, allows a CXL device, such as a CXL-compatible accelerator, to access and cache processor memory. In other words, it allows something like a GPU to coherently access and cache CPU memory. Both CXL.cache and CXL.memory operate using fixed message framing on a transaction and link layer separate from CXL.io, which allows for lower latencies across this layer.

The use of these three protocols enables a more efficient approach to a system’s resources, especially for heterogeneous computing, as memory can be addressed fluidly between processors and CXL devices.

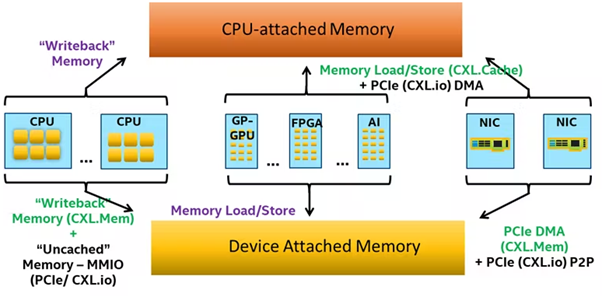

The diagram above illustrates how CPU-attached memory and accelerator-attached memory become available in a flexible manner, whereas in the past each component’s memory was siloed.

CXL devices and supported platforms

Compute Express Link is designed to support three primary device types:

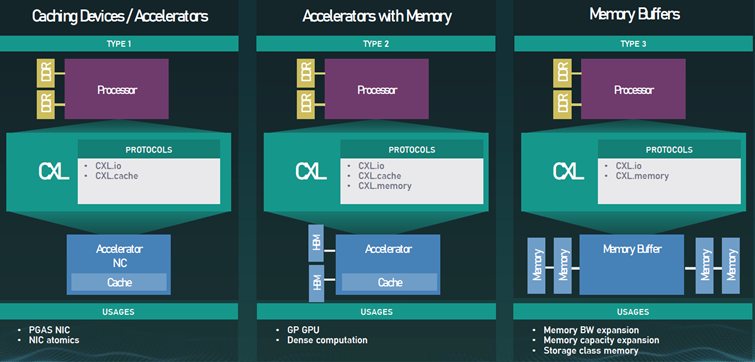

Type 1 – uses CXL.io and CXL.cache. This is designed for specialised accelerators such as a smart NIC with no local memory. These Type 1 CXL devices rely on coherent access to CPU memory.

Type 2 – uses all three CXL protocols. These are general purpose accelerators (GPU, ASIC or FPGA) with high performance local memory such as HBM. Both the Type 2 CXL devices and host CPUs are able to coherently access each other’s memory.

Type 3 – uses CXL.io and CXL.memory. These are for memory expansion modules and storage class memory. These Type 3 CXL devices provide the host CPU with low latency access to further local DRAM or non-volatile storage memory.
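The mapping between device types and sub-protocols described above can be summarised in a few lines of code. This is only an illustrative sketch: the `Protocol` enum, the `DEVICE_TYPES` table and the `classify` helper are hypothetical names made up for this example, not part of any CXL software interface.

```python
from enum import Flag, auto


class Protocol(Flag):
    """The three CXL sub-protocols (illustrative names)."""
    IO = auto()      # CXL.io
    CACHE = auto()   # CXL.cache
    MEM = auto()     # CXL.memory


# Device type -> protocol set, as listed in the article.
DEVICE_TYPES = {
    1: Protocol.IO | Protocol.CACHE,                 # e.g. smart NIC, no local memory
    2: Protocol.IO | Protocol.CACHE | Protocol.MEM,  # e.g. GPU/ASIC/FPGA with HBM
    3: Protocol.IO | Protocol.MEM,                   # e.g. memory expansion module
}


def classify(protocols):
    """Return the CXL device type matching a protocol set, or None."""
    if Protocol.IO not in protocols:
        # CXL.io is mandatory for every CXL device.
        raise ValueError("CXL.io support is mandatory")
    for device_type, required in DEVICE_TYPES.items():
        if protocols == required:
            return device_type
    return None


print(classify(Protocol.IO | Protocol.MEM))  # memory expander -> Type 3
```

A device that advertises only CXL.io matches none of the three types, so `classify` returns `None` for it, while a protocol set without CXL.io is rejected outright.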

As Compute Express Link is supported from PCIe 5.0 onwards, both Intel and AMD have slated support in their respective Xeon and EPYC PCIe 5.0 hardware, arriving with the upcoming release of Sapphire Rapids (Intel) and Genoa/Bergamo/Siena (AMD).

With regard to supported CXL devices, we are still in the early stages, as Intel and AMD are both yet to release their CXL-capable processors. Many device vendors are therefore still in the proof-of-concept stage of development. There are, however, some CXL devices that have just been released, such as:

Samsung’s 512GB CXL module, which uses the E3.S form factor and allows for up to 16TB of memory expansion per CPU at low latencies.

There are even vendors discussing and showcasing CXL 2.0 products, but that’s a topic to cover at a later date!

Here at Boston, we are partners with many of the companies that sit on the Board of Directors for Compute Express Link – so we’re eager and ready to embrace the new CXL era of computing.

Want to know more? Get in touch with the team today!

Author:

Sonam Lama

Senior FAE, Boston Limited