With the headline launch of the NVIDIA Ampere A100 Tensor Core GPU earlier this year and the new flagship NVIDIA DGX™ A100 system for AI workloads, featuring eight NVIDIA Ampere A100 Tensor Core GPUs (read our unboxing article on the DGX A100 here), NVIDIA also released a sister product codenamed ‘Redstone’. This lower-cost alternative brings the GPU processing power of an NVIDIA HGX-based system to a much wider enterprise AI/HPC audience. The Supermicro 2124GQ-NART is a compute powerhouse designed for enterprise AI, machine learning, deep learning training & inference and High Performance Computing workloads, featuring four NVIDIA Ampere A100 Tensor Core GPUs, two AMD EPYC™ 7002 Rome CPUs, up to 8 TB of memory and 800 Gb/s of network connectivity as standard, all in a compact 2U chassis.

The Supermicro 2124GQ-NART is a third of the size of the NVIDIA DGX A100 (2U compared to 6U), with half the number of NVIDIA Ampere A100 Tensor Core GPUs and half the network connectivity of the larger system, the same number of CPU cores (with 64-core CPUs fitted) and support for four times as much memory. The Supermicro 2124GQ-NART is truly an impressive option for any business looking for a GPU-accelerated solution.
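The comparison above comes down to a few simple ratios. The sketch below is a rough illustration rather than an official comparison: the Supermicro figures are taken from this article, while the DGX A100 figures (8 x 200 Gb/s of compute networking, 128 CPU cores and a 2 TB memory maximum) are our assumptions based on NVIDIA's published specification. Note that a ratio of 400% means four times as much, which is 300% more.

```python
# Compare the 2U Supermicro 2124GQ-NART with the 6U NVIDIA DGX A100.
# Supermicro values come from the article; DGX A100 values are ASSUMED
# from NVIDIA's published spec (8 x 200 Gb/s compute NICs, 2 TB max memory).
SUPERMICRO = {"height_u": 2, "gpus": 4, "network_gbps": 800,
              "cpu_cores": 128, "max_memory_tb": 8}
DGX_A100 = {"height_u": 6, "gpus": 8, "network_gbps": 1600,
            "cpu_cores": 128, "max_memory_tb": 2}


def ratio(metric: str) -> float:
    """Return the Supermicro value as a fraction of the DGX A100 value."""
    return SUPERMICRO[metric] / DGX_A100[metric]


for metric in SUPERMICRO:
    # e.g. "height_u: 33% of DGX A100"
    print(f"{metric:>14}: {ratio(metric):.0%} of DGX A100")
```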

As you can see from the above image, we have received the Supermicro 2124GQ-NART into the labs at Boston and have been hard at work preparing it for demonstration and testing by our partners and key customers. If you would like a trial of this system or any of our NVIDIA Ampere A100-based systems, please let us know; we recommend acting fast, as demand for these systems is expected to be high!

From the front you can see the four hot-swap, tool-less SATA/SAS/NVMe drive bays. These are the only storage in the system and are intended for the OS, application installation and caching: these systems are not designed as storage or general-purpose platforms, but as high-power GPU compute platforms for AI, ML/DL training & inference and HPC. Below the drive bays is a pull-out plastic tab, removable if desired, listing the credentials for the IPMI 2.0 out-of-band management interface. You can also see the four hot-swap, tool-less dual-fan enclosures, which pull straight out without the need to remove the two-part interlocking lid. The throughput of these fans is exceptional and the airflow during boot is loud. Once booted, the system settles down to a noise level comparable to any other 2U system. So far we have not been able to raise temperatures to the point where these fans need to run at high speed. Cooling in this system is outstanding.

From the back you can see the rear lid pull handle just below the punched grille, which provides additional exhaust capacity. The handle makes it easy to slide off and remove the rear panel. The lid for this system is in two parts and is secured with screws rather than a simple catch-and-slide mechanism, which adds structural strength to the system. From the rear you can also see the ports of the four NVIDIA Mellanox (NVIDIA acquired Mellanox in 2020) MCX653106A-HDAT ConnectX-6 adapter cards, which provide a combined 800 Gb/s of network bandwidth. These cards support NVIDIA Mellanox’s VPI (Virtual Protocol Interconnect) technology, which allows each port to run either HDR InfiniBand or 200 Gb Ethernet, giving a combined 800 Gb/s of either and flexibility when connecting to storage.
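Network figures are quoted in gigabits per second, while GPU and memory figures elsewhere in this article are in gigabytes per second, so it helps to convert between the two. This minimal sketch assumes each of the four cards contributes one 200 Gb/s link and ignores protocol overhead, so the byte figure is a theoretical upper bound.

```python
# Aggregate bandwidth of the four ConnectX-6 cards, ASSUMING one 200 Gb/s
# link per card (HDR InfiniBand or 200 GbE, selectable via VPI).
CARDS = 4
LINK_GBPS = 200  # per-card line rate in gigabits per second

total_gbps = CARDS * LINK_GBPS        # 800 Gb/s
total_gbytes_per_s = total_gbps / 8   # 100 GB/s, ignoring protocol overhead

print(f"{total_gbps} Gb/s ~= {total_gbytes_per_s:.0f} GB/s")
```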

If you look closely to the left of the four network cards you will see six circular cut-outs. These suggest Supermicro may intend to offer a water-cooled version of this system in the future, and we are seeing more and more water-cooled CPU and GPU systems being introduced. Unlike in the consumer space, the radiator and fan units are typically mounted not inside the chassis but externally, either within the rack or outside it elsewhere in the data centre. This allows higher-power CPUs/GPUs to be used within a given space, and external cooling lowers cooling costs while also allowing the cooling components to be reused across multiple generations of systems, further lowering the lifetime costs of a data centre. If needed, the vented panel that covers the ports can easily be removed by undoing its four screws.

You can also see the four black flip toggles with white arrows that allow the two PCI-Express card holders/risers to be removed easily. Together the risers provide four PCI-E Gen 4.0 x16 LP slots and one PCI-E Gen 4.0 x8 LP slot, which connect to the motherboard not directly via a socket but through SlimLine cables. Because each card holder/riser is connected by these cables rather than being pressed into a slot and held in place by friction, the card holders are extremely easy to remove and replace.

There are two 10 Gb onboard network interfaces, VGA, two USB 3.0 ports and an IPMI 2.0 out-of-band management interface. Like all new Supermicro designs, this system features the new AST2600 dual-core baseboard management controller (BMC), which provides faster POST times, improved security through verification of the BIOS/IPMI firmware at boot, and a modern, improved web interface.

The BIOS ROM chip is 256 MB. Most current systems typically feature a 32 MB or 64 MB ROM, so the Supermicro 2124GQ-NART represents a large jump in ROM size.

The Supermicro 2124GQ-NART is a bleeding-edge system featuring dual AMD EPYC™ Rome 7002 processors. At the top of the range these CPUs have 64 cores and 128 threads; the model described here has a base clock of 2 GHz with a maximum boost of 3.35 GHz, and 8 memory channels supporting a memory frequency of 3200 MHz. With a specification like this, it is easy to see why AMD’s EPYC™ Rome CPUs have taken the high-performance market by storm and are rivalling Intel once more. The AMD EPYC™ CPUs are essential to this system because they provide the necessary number of PCI-E lanes and support PCI-E Gen 4.0. Each CPU has 128 lanes; in a dual-socket system some of these carry the links between the two CPUs, leaving 128 lanes (or up to 160, depending on platform configuration) for devices. Fourth-generation PCI-Express again doubles the bandwidth of its predecessor, giving the 2124GQ-NART around 500 GB/s of PCI-E Gen 4.0 bandwidth, which is essential for the CPUs to make full use of the four NVIDIA Ampere A100 Tensor Core GPUs, each with 40 GB of HBM2 memory, and the 800 Gb/s of network connectivity.
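As a rough check on the PCI-E figures, the sketch below computes theoretical PCIe 4.0 throughput from the lane rate. It assumes 16 GT/s per lane with 128b/130b encoding and ignores packet overhead; on that basis, the ~500 GB/s figure appears consistent with 128 device lanes counted in both directions. The function name is our own, used only for illustration.

```python
# Theoretical PCIe 4.0 bandwidth. ASSUMES 16 GT/s per lane with 128b/130b
# line encoding and ignores packet/protocol overhead.
GT_PER_S = 16.0
ENCODING = 128 / 130


def pcie4_gbytes_per_s(lanes: int, bidirectional: bool = False) -> float:
    """Return theoretical PCIe 4.0 throughput in GB/s for a lane count."""
    per_lane = GT_PER_S * ENCODING / 8  # ~1.97 GB/s per lane, per direction
    total = per_lane * lanes
    return total * 2 if bidirectional else total


print(f"x16 (one GPU): {pcie4_gbytes_per_s(16):.1f} GB/s per direction")
print(f"128 lanes    : {pcie4_gbytes_per_s(128):.0f} GB/s per direction")
print(f"128 lanes    : {pcie4_gbytes_per_s(128, bidirectional=True):.0f} GB/s both ways")
```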

Memory bandwidth is an often-overlooked aspect of compute and, as they say in this space, ‘you have to be able to feed the beast’. These systems support up to 8 TB of DDR4 ECC registered memory at 3200 MHz, and with 8 memory channels per CPU this gives a theoretical peak of roughly 205 GB/s of memory bandwidth per socket, or about 410 GB/s across both.
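The peak memory bandwidth follows from channels x transfer rate x bus width. This sketch assumes every channel is populated and running at DDR4-3200 (the "3200 MHz" rating is really 3200 mega-transfers per second) with a 64-bit data path per channel; real, sustained bandwidth will be lower.

```python
# Theoretical peak DDR4 bandwidth: channels x transfer rate x 8-byte bus.
# ASSUMES all channels populated and running at DDR4-3200 (3200 MT/s).
CHANNELS_PER_CPU = 8
SOCKETS = 2
MT_PER_S = 3200          # mega-transfers per second
BYTES_PER_TRANSFER = 8   # 64-bit data bus per channel, ECC bits excluded

per_socket_gb_s = CHANNELS_PER_CPU * MT_PER_S * BYTES_PER_TRANSFER / 1000
system_gb_s = per_socket_gb_s * SOCKETS

print(f"per socket: {per_socket_gb_s:.1f} GB/s, system: {system_gb_s:.1f} GB/s")
```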

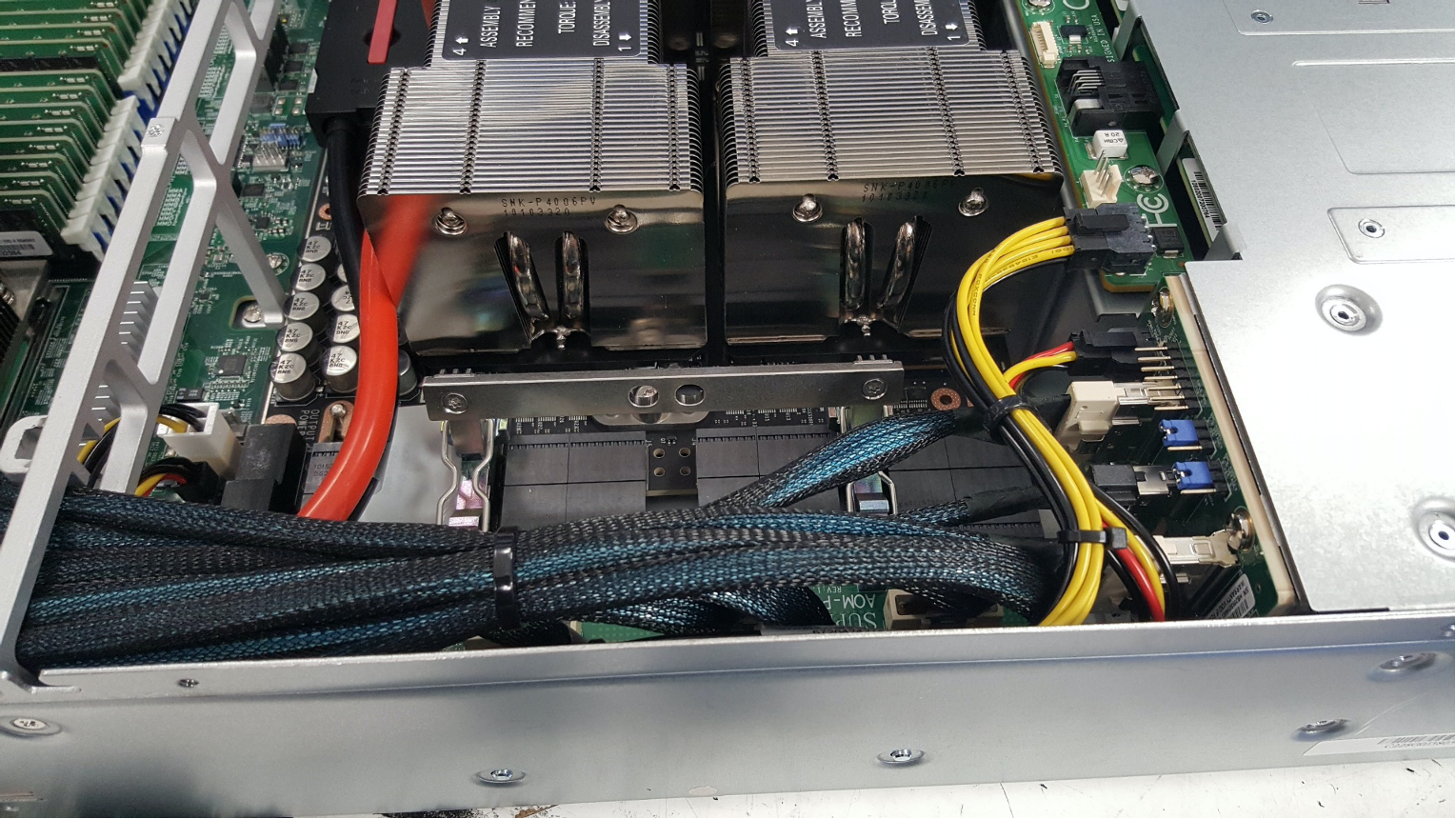

With the lower part of the interlocking two-part lid and the heatsink shroud removed, we can see that the Supermicro 2124GQ-NART is a GPU behemoth, featuring four socketed (SXM4) NVIDIA A100 Tensor Core GPUs, each with 40 GB of HBM2 memory. Each of the four large heatsinks dissipates up to 400 W of heat. The GPUs sit on a daughter board powered by the large red and black power cables visible below and to the left of the GPU heatsinks.

Above the GPU heatsinks are the 32 memory slots, supporting up to 8 TB of DDR4 ECC registered memory at up to 3200 MHz, fed by the 16 memory channels of the dual AMD EPYC™ Rome CPUs (8 per CPU) and providing a vast amount of high-speed memory for customer workloads. You can also see the rigid support running from side to side to prevent chassis flex; the interlocking two-part lid also screws into this support for maximum rigidity. This system has an extremely high build quality. Visible against the left wall of the chassis and passing through the mid-chassis support are a number of dark blue SlimLine cables, which provide the data path from the motherboard to the GPU daughter board.
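Reaching the 8 TB maximum implies a particular DIMM population. This sketch assumes identical DIMMs in every slot and two DIMMs per channel (32 slots across 16 channels); it only checks the capacity arithmetic. Memory speed at two DIMMs per channel can be lower than at one on some platforms, so Supermicro's memory population guide is the authority for a real build.

```python
# DIMM population needed for 8 TB across 32 slots.
# ASSUMES identical DIMMs in every slot and 16 channels (2 CPUs x 8).
SLOTS = 32
CHANNELS = 2 * 8
TARGET_TB = 8

dimms_per_channel = SLOTS // CHANNELS       # 2 DIMMs per channel
dimm_size_gb = TARGET_TB * 1024 // SLOTS    # 256 GB per DIMM

print(f"{dimms_per_channel} DIMMs per channel, {dimm_size_gb} GB DIMMs")
```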

The below image illustrates the airflow priority of these GPU compute-intensive systems. A traditional large 4U GPU-based system might feature several PCI-E cards at the rear of the system, where they receive the cooling airflow second-hand. In the Supermicro 2124GQ-NART the airflow priorities are GPUs first, CPUs second and PCI-Express cards third. As it should be, the components doing the most work receive the coolest air. Supermicro's engineers have again outdone themselves by packing in as much performance as possible while still keeping it cool.

Here we can see more evidence of how well built the Supermicro 2124GQ-NART is: the thickness of the mid-chassis support, the size of the NVIDIA A100 daughter board power connector and cables, and the robust pull handle that locks the daughter board into position, shown below in the unlocked position.

In the below image you can see one of the dual 2200 W Titanium-level power supplies with Smart Power Redundancy, as well as the vented design that helps overall system airflow. 3000 W versions are coming soon. Each power supply is removed using a lever arm rather than a locking latch, which makes removing the power supplies easy.

Summary:

The Supermicro 2124GQ-NART is a GPU powerhouse designed for the most intensive Artificial Intelligence, Machine Learning, Deep Learning training & inference and HPC workloads. At the moment NVIDIA dominates the GPU-accelerated space, and this system is an exciting entry into it, featuring four NVIDIA Ampere A100 Tensor Core GPUs interconnected via third-generation NVIDIA® NVLink®, with 50 GB/s of bandwidth per NVLink link and an aggregate of 600 GB/s of NVLink bandwidth per GPU, as well as 160 GB of total HBM2 GPU memory. This would not have been possible without the dual AMD EPYC™ Rome 7002 processors and their PCI-Express Gen 4.0 lanes, which provide around 500 GB/s of bandwidth. Support for up to 8 TB of DDR4 ECC registered memory at 3200 MHz will accommodate even the largest workloads, and 800 Gb/s of HDR InfiniBand or 200 Gb Ethernet network bandwidth will ensure any workload can be fed with data.
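The NVLink and HBM2 totals can be reproduced from per-link and per-GPU figures. This is a sketch under stated assumptions: 12 third-generation NVLink links per A100 at 50 GB/s each (both directions combined), and a fully connected four-GPU board in which each GPU splits its links evenly across its three peers. The per-pair figure depends on that assumed topology and is not a measured value.

```python
# NVLink and HBM2 totals for a four-GPU A100 board.
# ASSUMES 12 NVLink 3 links per GPU at 50 GB/s each (bidirectional), and
# links split evenly across the 3 peers in a fully connected topology.
GPUS = 4
LINKS_PER_GPU = 12
GB_S_PER_LINK = 50
HBM2_GB_PER_GPU = 40

per_gpu_nvlink = LINKS_PER_GPU * GB_S_PER_LINK     # 600 GB/s per GPU
links_per_peer = LINKS_PER_GPU // (GPUS - 1)       # 4 links to each peer
per_pair_nvlink = links_per_peer * GB_S_PER_LINK   # 200 GB/s per GPU pair
total_hbm2 = GPUS * HBM2_GB_PER_GPU                # 160 GB in total

print(f"NVLink per GPU: {per_gpu_nvlink} GB/s, per pair: {per_pair_nvlink} GB/s")
print(f"Total HBM2: {total_hbm2} GB")
```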

To arrange a trial of the Supermicro 2124GQ-NART, the NVIDIA DGX A100 or the DGX Station, please get in touch with our team at Boston Labs who will arrange a testing slot.

Written by

Dan Rogers, Field Application Engineer, Boston Limited.