Contents:

- What is Intel® Optane™ DC Persistent Memory?

- Where has Intel® Optane™ DC Persistent Memory come from?

- Architecture considerations

- Hardware Platform

- Supported OS's (as of 01/05/19)

- Storage Pyramid

- Operating modes

- Capacity sizes

What is Intel® Optane™ DC Persistent Memory?

Intel® Optane™ DC Persistent Memory (DCPMM) is an emerging technology in which non-volatile media is placed on a Dual In-Line Memory Module (DIMM) and installed on the memory bus, which has traditionally been used only for volatile memory.

This new persistent memory is designed to sit on the same bus as volatile memory such as DRAM, and often works in conjunction with it, either to achieve a higher overall system memory capacity or to improve performance through DRAM caching.

The key thing that differentiates persistent memory DIMMs from DRAM DIMMs is that the data stored on them is retained when the system is shut down or loses power. This allows the technology to be used as a form of permanent storage like Hard Disk Drives (HDDs) or Solid-State Drives (SSDs), but with memory-like latencies. Intel® DCPMM is based on Intel® Optane™ Memory technology and provides the ability to keep more data closer to the CPU (that is, "warmer") for faster processing.

DCPMM is designed for use with the Second-Generation Intel® Xeon® Scalable Platform processors code-named Cascade Lake.

Where has Intel® Optane™ DC Persistent Memory come from?

DCPMM has had a long route to market. For those who have been following it, this may not be new, but it may equally tie together some technologies and terms that have appeared in the press over the last 4-5 years. DRAM has been on the market, through various generational changes, since around 1970, and while progress on speed and capacity marches on, the underlying technology remains largely the same to this day. NAND has a shorter history but has still been around since the late 1980s.

Intel® and Micron worked closely together and in 2015 introduced a new type of memory technology named 3D XPoint. It can function in a similar way to DRAM or NAND depending on the application, and has been stated to be, in theory, up to 1000x faster than NAND flash memory with up to 1000x the endurance.

3D XPoint was then packaged and marketed as an Intel® storage/NAND replacement product for desktop/enthusiast use called Optane memory, which should not be confused with DCPMM (more about that below). Optane memory was originally released as two M.2 modules, in 16GB and 32GB capacities, and these were positioned as an HDD performance accelerator and not as a direct memory extension or replacement.

Additionally, an ultra-high performance, high-endurance NVMe SSD for the data centre/enterprise was brought to market using Optane memory: the Intel® Optane™ SSD DC P4800X series.

Available in capacities up to 1.5TB, and with performance in excess of half a million IOPS and 2GB/s throughput, the Optane series is the very definition of a flagship storage product.

(See here for more information: https://www.intel.co.uk/content/www/uk/en/architecture-and-technology/optane-memory.html and https://www.intel.com/content/www/us/en/products/memory-storage/solid-state-drives/data-center-ssds/optane-dc-ssd-series/optane-dc-p4800x-series.html)

In April 2019 Intel® then announced DCPMM, the DRAM expansion product based on Optane, along with the 2nd Generation Intel® Xeon® Scalable Processor family. Before release, Intel® products are given code names, and for these products those were Apache Pass and Cascade Lake respectively. With the products so hotly anticipated and discussed at length before being brought to market, you may still hear media and professionals use these code names regularly.

Intel's DCPMM is the implementation of 3D XPoint that coexists with DRAM on the memory bus. It can be used as a DRAM alternative, but also as a high-performance, low-latency storage device.

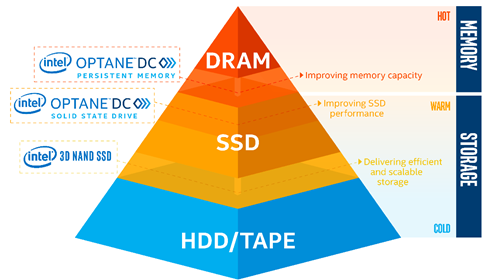

The Storage Pyramid

To fulfill the ever-growing requirements of the age of Big Data, Intel foresaw that the existing storage and memory technologies of today would not satisfy the changing demand of tomorrow. The need for a new tier of storage that sits between today's SSD and DRAM in the price, capacity and latency stakes was clear, and Intel® were keen to fill it.

The infographic storage pyramid below explains the hierarchy of these tiers:

At the top is the fastest technology, CPU cache, followed by DRAM, DCPMM, NVMe SSDs, HDDs and finally the slowest, magnetic tape: our old reliable that, like a friend who never seems to want to leave the party, is still around.

Architecture considerations

Hardware Platform

2nd Generation Intel® Xeon® Scalable processors are required to run DCPMM, but not all Xeon SKUs are made equal. Platinum and Gold SKUs all support Intel Optane DC Persistent Memory modules, but Silver and Bronze do not, with one exception: the Intel Xeon Silver 4215 can support the modules, making it the lowest-spec SKU that is DCPMM capable. X11 Generation Supermicro systems are the only generation of platform that can support DCPMM, provided that the latest BIOS/IPMI is used.

Supported Total Memory Capacities

As covered in the Hardware Platform section, the Second Generation Intel® Xeon® Scalable family has four product ranges, which denote varying feature levels. Within these ranges, SKUs can come in multiple flavours for memory capacity per socket. The naming convention for this is as follows:

- No suffix = 1TB/Socket memory tier

- M = 2TB/Socket memory tier

- L = 4.5TB/Socket memory tier

To put this into context, let's use Intel's flagship processor, the 8280, and the three SKU variants that are available.

If you require 768GB per CPU of total memory capacity, then the standard Intel® Xeon® Platinum 8280 (1TB tier) is sufficient.

If you require 2TB per CPU of total memory capacity, then the Intel® Xeon® Platinum 8280M is required.

If you require 4.5TB per CPU of total memory capacity, then the Intel® Xeon® Platinum 8280L is required.

The same limits apply whether the capacity is provided by DDR4, DCPMM, or both.
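The suffix rule above amounts to a simple lookup. The following is a minimal Python sketch of that lookup; the function name `required_suffix` and the tier table are illustrative only, built from the per-socket limits listed in this article rather than from any Intel tool.

```python
# Per-socket memory tiers as listed in this article, in GB (1TB = 1024GB).
# Ordered from smallest to largest so the first match is the lowest tier.
MEMORY_TIERS_GB = [
    ("", 1024),   # no suffix: 1TB per socket
    ("M", 2048),  # M tier: 2TB per socket
    ("L", 4608),  # L tier: 4.5TB per socket
]


def required_suffix(capacity_per_socket_gb: float) -> str:
    """Return the SKU suffix whose tier covers the requested capacity."""
    for suffix, limit_gb in MEMORY_TIERS_GB:
        if capacity_per_socket_gb <= limit_gb:
            return suffix
    raise ValueError("capacity exceeds the largest (L) memory tier")


if __name__ == "__main__":
    for need_gb in (768, 2048, 4608):
        print(f"{need_gb}GB per socket: Platinum 8280{required_suffix(need_gb)}")
```

A lookup like this only covers the capacity tier; it says nothing about whether a given SKU supports DCPMM at all, which still needs checking against the platform notes above.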

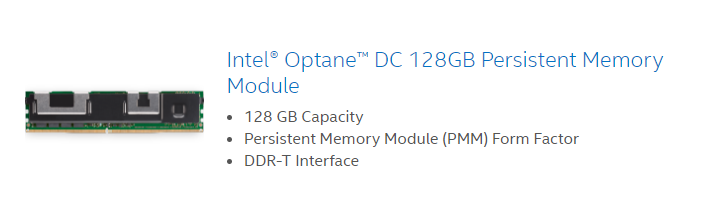

Intel® DCPMM is available in capacities from 128GB as of publishing.

Supported OS's (as of 01/05/19)

Intel® DCPMM is supported by the latest versions of the most popular server/enterprise operating systems which are available in the market today.

- Microsoft Windows Server 2019

- Red Hat Enterprise Linux 7.6

- SUSE Linux Enterprise Server 12 SP4

- SUSE Linux Enterprise Server 15

- VMware vSphere Hypervisor (ESXi) 6.7 U1

- Ubuntu 18.04 LTS

Operating modes

Intel® DCPMM can operate in a number of distinct modes. Whilst use as a typical memory extension is available, there are also modes which are optimised for different use cases.

In Memory Mode, DCPMMs act as volatile system memory under the control of the operating system. Any DRAM in the platform will act as a cache working in conjunction with the DCPMMs.

In App Direct Mode, DCPMMs and DRAM DIMMs act as independent memory resources under direct load/store control of the application. This allows the DCPMM capacity to be used as byte-addressable persistent memory that is mapped into the system physical address space (SPA) and directly accessible by applications. This also enables the DCPMM to be utilised as an ultra-low latency, high-performance block storage device.
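From an application's point of view, the load/store access described above is typically reached by memory-mapping a file that lives on a DAX-enabled file system backed by the persistent memory. The Python sketch below illustrates the idea only: the helper names and the example path in the usage note are hypothetical, and `mmap.flush()` stands in for the optimised flush routines that a dedicated persistent memory library such as PMDK would provide.

```python
import mmap
import os


def write_persistent(path: str, payload: bytes, size: int = 4096) -> None:
    """Store payload at the start of a memory-mapped file using a slice write."""
    if len(payload) > size:
        raise ValueError("payload does not fit in the mapped region")
    fd = os.open(path, os.O_CREAT | os.O_RDWR, 0o600)
    try:
        os.ftruncate(fd, size)  # make sure the file backs the whole mapping
        with mmap.mmap(fd, size) as region:
            region[: len(payload)] = payload  # ordinary store into mapped memory
            region.flush()  # ask the OS to make the stores durable
    finally:
        os.close(fd)


def read_persistent(path: str, length: int) -> bytes:
    """Read length bytes back from the start of the mapped file."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
            return region[:length]
```

For example, `write_persistent("/mnt/pmem0/example.bin", b"hello")` followed later by `read_persistent("/mnt/pmem0/example.bin", 5)` would return the same bytes, assuming `/mnt/pmem0` is where a persistent memory file system has been mounted. The same code runs against any ordinary file, which makes it easy to try out without DCPMM hardware.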

In Mixed Mode, a percentage of the DCPMM capacity is used in Memory Mode and the remainder in App Direct Mode.
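The Mixed Mode split is simple percentage arithmetic. As a rough sketch, assuming the split is expressed as a percentage of total DCPMM capacity given to Memory Mode (the function name `split_mixed_mode` is hypothetical, and real provisioning tools may round the resulting sizes):

```python
from typing import Tuple


def split_mixed_mode(total_dcpmm_gb: float, memory_mode_percent: float) -> Tuple[float, float]:
    """Return (memory_mode_gb, app_direct_gb) for a given Mixed Mode split."""
    if not 0 <= memory_mode_percent <= 100:
        raise ValueError("memory_mode_percent must be between 0 and 100")
    memory_mode_gb = total_dcpmm_gb * memory_mode_percent / 100
    app_direct_gb = total_dcpmm_gb - memory_mode_gb  # the remainder is App Direct
    return memory_mode_gb, app_direct_gb


if __name__ == "__main__":
    mem_gb, app_gb = split_mixed_mode(1024, 25)
    print(f"Memory Mode: {mem_gb:.0f}GB, App Direct: {app_gb:.0f}GB")
```

Setting the percentage to 100 or 0 corresponds to pure Memory Mode or pure App Direct Mode respectively.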

Operating mode architecture considerations

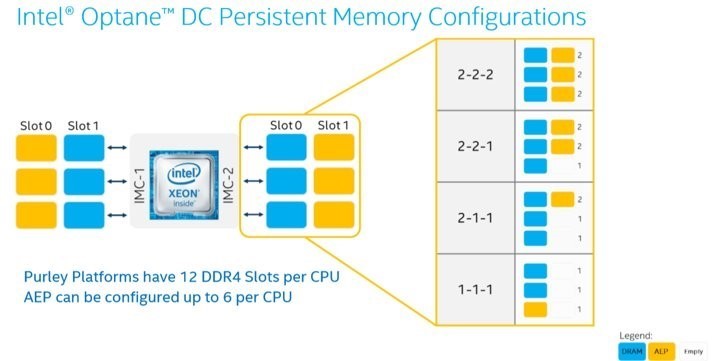

In the Xeon® Scalable Processor Family, each CPU has two IMCs (Integrated Memory Controllers), each providing three memory channels, for a total of six channels per CPU or twelve across a dual-socket system.

For example, a dual-socket 2nd Generation Intel® Xeon® Scalable system takes 12x DDR4 2933MHz DIMMs at one DIMM per channel, and a single-socket system takes 6x.

Traditionally, when architecting a DRAM system, you would ensure at least one DIMM per channel (DPC). The layout is described as 1 DPC, 1.5 DPC or 2 DPC depending on the exact arrangement you choose to deliver the capacity you need. Leaving a channel unpopulated or unbalancing the channels can result in poorer performance, so is generally avoided.
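The channel and DPC figures follow from straightforward arithmetic. A small Python sketch, using the two-IMC, three-channel layout described above (the function names are illustrative):

```python
IMCS_PER_CPU = 2      # integrated memory controllers per CPU
CHANNELS_PER_IMC = 3  # memory channels per controller


def memory_channels(sockets: int) -> int:
    """Total memory channels in a system with the given number of CPUs."""
    return sockets * IMCS_PER_CPU * CHANNELS_PER_IMC


def dimms_per_channel(dimm_count: int, sockets: int) -> float:
    """Average DIMMs per channel (DPC) for a given DIMM count."""
    return dimm_count / memory_channels(sockets)


if __name__ == "__main__":
    for dimms in (12, 18, 24):
        print(f"{dimms} DIMMs in a dual-socket system: {dimms_per_channel(dimms, 2)} DPC")
```

An average below 1 DPC indicates at least one unpopulated channel, which is the case the paragraph above recommends avoiding.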

This architectural best practice does not change significantly when installing both DRAM DIMMs and DCPMMs. However, with DCPMM there are additional population rules to follow around the number of DCPMMs relative to the number of DIMMs, and around the population of memory channels.

The result is a set of ratio recommendations depending on the mode you wish to use: App Direct, Memory or Mixed Mode. Intel expresses these population layouts in the notation 2-2-2, 2-2-1, 2-1-1 or 1-1-1, as shown in the diagram.

Population rules change depending on the system/motherboard slot configuration, so it's important to check each model individually. They also depend on the mode you wish to use and the capacity of DRAM and DCPMM you wish to populate.

For example, a good Memory Mode combination to achieve 1TB of overall capacity using a 1-1-1 population model is as follows:

| Qty | Memory Type | Capacity |
| --- | --- | --- |
| 12 | 16GB DDR4 Registered ECC Memory | 192GB |
| 4 | 256GB Intel DCPMM Memory | 1TB |
| | Total Usable Memory Capacity | 1TB |

This gives a ratio of 5.3:1 of Memory Mode capacity to DRAM cache, and the same configuration would give up to 1TB of App Direct persistent memory capacity plus 192GB of DRAM.

At the other end of the scale, a configuration to achieve 6TB of overall capacity using a 2-2-2 population model is as follows:

| Qty | Memory Type | Capacity |
| --- | --- | --- |
| 12 | 64GB DDR4 Registered ECC Memory | 768GB |
| 12 | 512GB Intel DCPMM Memory | 6TB |
| | Total Usable Memory Capacity | 6TB |

This gives a ratio of 8:1 of Memory Mode Capacity to DRAM cache and the same configuration would give up to 6TB of App Direct Persistent Memory capacity + 768GB of DRAM.
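The ratios quoted for both examples can be checked with a few lines of Python. This sketch assumes the 1TB example is built from four 256GB DCPMMs, which is what the stated capacity and 5.3:1 ratio imply; the function name is illustrative.

```python
def memory_mode_ratio(dram_dimms: int, dram_gb_each: int,
                      dcpmm_modules: int, dcpmm_gb_each: int) -> float:
    """Ratio of DCPMM (Memory Mode) capacity to DRAM cache capacity."""
    dram_gb = dram_dimms * dram_gb_each
    dcpmm_gb = dcpmm_modules * dcpmm_gb_each
    return dcpmm_gb / dram_gb


if __name__ == "__main__":
    # 12 x 16GB DRAM with 4 x 256GB DCPMM (1TB example)
    print(f"{memory_mode_ratio(12, 16, 4, 256):.1f}:1")
    # 12 x 64GB DRAM with 12 x 512GB DCPMM (6TB example)
    print(f"{memory_mode_ratio(12, 64, 12, 512):.1f}:1")
```

Running the same calculation on a candidate configuration is a quick way to compare it with the two reference points above before checking it against the population rules for a specific system.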

These are just two examples of the capacities and ratios that are achievable. Many different configurations are possible depending on the application (Memory Mode, App Direct or Mixed) and the number of DIMM slots the system may have.

It's important to consider the application when deciding on a capacity and the eventual ratio of DRAM to DCPMM, as every case is different. The team at Boston are ready to help you make the right choice, so get in contact and we'll guide you through the decision-making process.

A wide range of systems supporting Intel® Optane™ DCPMM are available from Boston today, ranging from 1U units to high-density storage, high-capacity storage and even blades.

If you would like more information or design and architecture help around Intel's Optane™ DC Persistent Memory, then we'd be keen to hear from you. You can get in touch below:

Further information on Intel DCPMM and compatible products can be found on our website here.